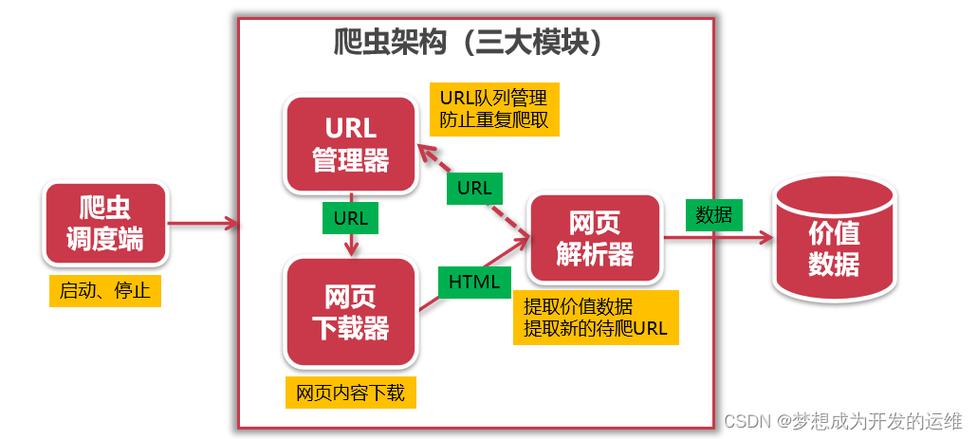

PPT信息,详细先容下爬取Cacti监控项的源码

有人评论须要源码的,这不立马写篇文章附上源码:

#! python2.7

# -- encoding:utf-8 --

import os

import urllib2

import cookielib

import urllib

import re

import time

import datetime

import warnings

import csv

from openpyxl import load_workbook

from openpyxl.styles import Font, Alignment, Border, Side

# python2 环境开拓

#输入 日期 景象 热点事宜

def write_24sr01(read_csv):

#格式化流量

read_list = list(read_csv)

required_col = [4, 2, 3, 5, 6]

results = [0] 5

for each_line in read_list[10:]:

for i in range(0, 5):

if len(each_line):

x = float(each_line[required_col[i]])

if x > results[i]:

results[i] = x

for i in range(0, 5):

results[i] = round(results[i]/100, 4)

cell = ws.cell(row=5, column=i 2 + 2) #存储相应的值到excel表中。

cell.value = results[i]

cell.font = font1

cell.alignment = align

cell.number_format = num_format

cell.border = border

def write_24sr02(read_csv):

read_list = list(read_csv)

required_col = 5 #原数据增加了4列导致数据不全 (10列数据取第6列)

result = 0

for each_line in read_list[10:]:

if len(each_line):

x = float(each_line[required_col])

if x > result:

result = x

result = round(result / 1000000000, 2)

cell = ws.cell(row=5, column=11, value=result)

cell.font = font1

cell.alignment = align

cell.border = border

def write_21sr01(read_csv):

# print(read_csv)

read_list = list(read_csv)

# print(read_list)

required_col = 10 #csv的第10列数值是我们须要的流量,流量比拟出峰值。

result = 0

for each_line in read_list[10:]:

if len(each_line):

x = float(each_line[required_col])

if x > result:

result = x

result = round(result/1000000000, 2)

# print(result)

cell = ws.cell(row=5, column=12, value=result)

cell.font = font1

cell.alignment = align

cell.border = border

def write_cacti(read_csv,tag,fenmu):

read_list = list(read_csv)

required_col = tag #tag是列数

results = 0

# results = [0] 5 #得到列表[0, 0, 0, 0, 0] 得到5行数据

for each_line in read_list[10:]: #从第10行开始

if len(each_line):

x = float(each_line[required_col])

if x > results:

results = x

results = round(results / fenmu, 2)

return results

if __name__ == '__main__':

warnings.filterwarnings('ignore')

today = datetime.datetime.now()

yesterday = today + datetime.timedelta(days=-1)

yesterday_str = str(yesterday.strftime("%Y-%m-%d"))

# 输入 日期 景象 热点事宜

print u"请输入日期,如 2017-01-01"

print u"或者直接回车利用昨天日期" + yesterday_str

query_day_list = []

while len(query_day_list) == 0:

input_day = raw_input(":")

if input_day == '':

query_day_list = [yesterday_str]

print u'利用默认日期:' + yesterday_str

else:

query_day_list = re.findall(r'(2[0-9]{3}-[0-1][0-9]-[0-3][0-9])', input_day)

# 判断日期格式

if len(query_day_list) == 0:

print u'格式缺点'

else:

try: #实在是巡检的前一天的

date_check = datetime.datetime.strptime(query_day_list[0], "%Y-%m-%d")

# 判断日期合法

except Exception as e:

print u'日期缺点'

query_day_list = []

query_day = query_day_list[0]

print u"请输入当天巡检员"

name = raw_input(":")

print u"请输入当天景象"

weather = raw_input(":")

print u"请输入当天热点事宜"

hot_thing = raw_input(":")

query_day = yesterday_str

cookie = cookielib.CookieJar()

handler = urllib2.HTTPCookieProcessor(cookie)

post_opener = urllib2.build_opener(handler) #得到句柄实例

postUrl = 'http://ip/index.php' #IP根据你Cacti 网址更新

username = 'username'

password = 'password'

postData = {

'action': 'login',

'login_password': password,

'login_username': username,

}

headers = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,/;q=0.8',

'Accept-Encoding': 'gzip, deflate',

'Accept-Language': 'zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US',

'Connection': 'keep-alive',

'Host': 'ip',

'Upgrade-Insecure-Requests': '1',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:61.0) Gecko/20100101 Firefox/61.0'

}

data = urllib.urlencode(postData)

request0 = urllib2.Request('http://ip/') #IP根据你Cacti 网址更新

response0 = post_opener.open(request0)

request2 = urllib2.Request(postUrl, data, headers)

response2 = post_opener.open(request2)

# 登录CartiEZ成功

start_time = time.strptime(query_day, "%Y-%m-%d")

start_time_float = time.mktime(start_time)

star_time_int = int(start_time_float)

end_time_int = star_time_int + (24 60 60 - 60)

start_time_str = str(star_time_int)

end_time_str = str(end_time_int)

graph_codes = [ #graph_codes通过Cacti的网址剖析得出来的,每次点击流量是通过Get办法获取到相应code的图表。

'1134', '3461', '3129',

'3159', '3351', '2679','4145','4146',

'4070', '4068','3313', '3314', #防火墙

'2299', '2847', '2842', '2843',

'2844', '2845', '2264', '2269',

# '215' 监视器直接拿到源码,对源码的red.gif 还是green.gif 进行判断

]

host_url = 'http://ip/graph_xport.php' # 是剖析html源码或者浏览器开拓者模式抓包得来。

hostIp = 'file_server' #将生产的excel结果保存到远端文件做事器中

sharePath = r'\\巡检目录\\isp_xunjian'.decode('utf-8')

filename = r'巡检记录-2020.xlsx'.decode('utf-8')

srcFilename = r'\\' + hostIp + sharePath + '\\' + filename

#获取文档r表示不须要转义

# print srcFilename.encode('utf-8') #ces

today = datetime.datetime.now()

yesterday = today + datetime.timedelta(days=-1)

yesterday_str = str(yesterday.strftime("%Y-%m-%d"))

dir_path = r'\\' + hostIp + sharePath + '\\' + yesterday_str

if not os.path.exists(dir_path):

os.makedirs(dir_path)

wb = load_workbook(srcFilename)

ws = wb.active # 不用sheet = wb['Shett1']

font1 = Font(size=9, )

align = Alignment(horizontal='center')

num_format = '0.00%'

side = Side(style='thin', color="000000")

border = Border(left=side, right=side, top=side, bottom=side)

rows = ws.max_row

cols = ws.max_column

col_null = 0

result = []

for i in range(0, 20):

get_param = {

'graph_end': end_time_str,

'graph_start': start_time_str,

'local_graph_id': graph_codes[i],

'rra_id': '0',

'view_type': 'tree'

}

get_url = urllib.urlencode(get_param)

full_url = host_url + '?' + get_url

# print (full_url) # 须要测试用

response = post_opener.open(full_url)

csv_file = csv.reader(response)

if graph_codes[i] == '1134':

result.append(write_cacti(csv_file,3,1000)) #这里把稳CSV处理列从0开始的 #csv对应作为参数

elif graph_codes[i] == '3461' or graph_codes[i] =='3129' :

result.append(write_cacti(csv_file, 2,1000000000)) # 第二个参数2表示第三列值,第三个参数表示流量以G为单位

elif graph_codes[i] == '3159':

result.append(write_cacti(csv_file, 1, 1))

elif graph_codes[i] == '3351' or graph_codes[i] == '2679' or graph_codes[i] == '4145' or graph_codes[i] == '4146' or graph_codes[i] == '4070' or graph_codes[i] == '4068' \

or graph_codes[i] == '3313' or graph_codes[i] == '3314' :

result.append(write_cacti(csv_file, 2, 1000000000))

elif graph_codes[i] == '2299' or graph_codes[i] == '2847':

result.append(write_cacti(csv_file, 25, 1000000000))

elif graph_codes[i] == '2842' or graph_codes[i] == '2843' or graph_codes[i] == '2844' or graph_codes[i] == '2845' \

or graph_codes[i] == '2264' or graph_codes[i] == '2269':

result.append(write_cacti(csv_file, 1, 1000000000))

for m in range(2,12): #格式化Excel单元格

cell = ws.cell(row=m, column=cols+1)

cell.font = font1

cell.alignment = align

cell.border = border

ws.cell(row=2, column=cols+1).value = time.strftime("%Y-%m-%d", time.localtime()) #日期

ws.cell(row=3, column=cols+1).value = name

ws.cell(row=4, column=cols+1).value = weather

ws.cell(row=5, column=cols+1).value = hot_thing

ws.cell(row=6, column=cols+1).value = result[0]

ws.cell(row=7, column=cols+1).value = u'24-SR:' + str(result[1]) + u'4' + '\n' + u'21-SR:' + str(result[2]) + u'2'

ws.cell(row=8, column=cols+1).value = result[3]

ws.cell(row=9, column=cols+1).value = u'山石:' + str(result[4]+ result[5]) + u'\n' + u'华为:' + str((result[6]+ result[7])/2) + u'\n' + u'郊县-01:' + str(result[8]+ result[9]) + u'\n' + u'郊县-02:' + str(result[10]+ result[11])

ws.cell(row=10, column=cols+1).value = u'IN-MAX:' + str(result[12]) + u'\n' + u'OUT-MAX:' + str(result[13])

ws.cell(row=11, column=cols+1).value = u'XH-ae29:' + str(result[14] + result[15]) + u'\n' + u'BJ-ae27:' + str(result[16] + result[17]) + u'\n' + u'SD-ae8:' + str(result[18] + result[19])

#view-source:http://ip/graph_xport.php?local_graph_id=3540&rra_id=0&view_type=tree&graph_start=1534055781&graph_end=1534660581

#网页元素或源码剖析可获取到如上关键链接,Cacti每5分钟采集一次的数据存储在csv文件里。我们须要读取csv的相应数据。

pic_names = [

r'\拨号并发数.png', r'\SR流量监控-1.png', #导出你须要的流量图

]

host_url = 'http://ip/graph_image.php'

for i in range(0, 20):

get_param = {

'graph_end': end_time_str,

'graph_start': start_time_str,

'local_graph_id': graph_codes[i],

'rra_id': '0',

'view_type': 'tree'

}

get_url = urllib.urlencode(get_param)

full_url = host_url + '?' + get_url

response = post_opener.open(full_url)

pic = response.read()

f = open(dir_path + pic_names[i].decode('utf-8'), 'wb')

f.writelines(pic)

f.close()

print '天生'.decode('utf-8') + dir_path + pic_names[i].decode('utf-8')

time.sleep(5)

#须要

try:

wb.save(srcFilename)

except Exception as e:

print str(e)

对应代码这里提出如下问题,供大家思考动动手:

Cacti监控项的主机状态提取出来。zabbix的怎么取值,给个范例。(后面一节我会公布自己的脚本)网络方面感兴趣或者对Python感兴趣的同学可以持续关注我,我会禁绝时发布事情中碰着的编程案例或者网络故障案例。一起互换学习。