Since you can use this share from multiple threads, and libcurl has no internal thread synchronization, you must provide mutex callbacks if you’re using this multi-threaded. You set lock and unlock functions with curl_share_setopt too.

See these two examples:

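The examples that ship with curl still demonstrate the curl_multi_fdset approach. In practice curl_multi_wait can now be used instead. Reportedly this improvement came from Facebook engineers: "Facebook contributes fix to libcurl's multi interface to overcome problem with more than 1024 file descriptors." For usage, see here.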

Let's look at how the multi interface works through an example (note that, for brevity, some return values are not checked):

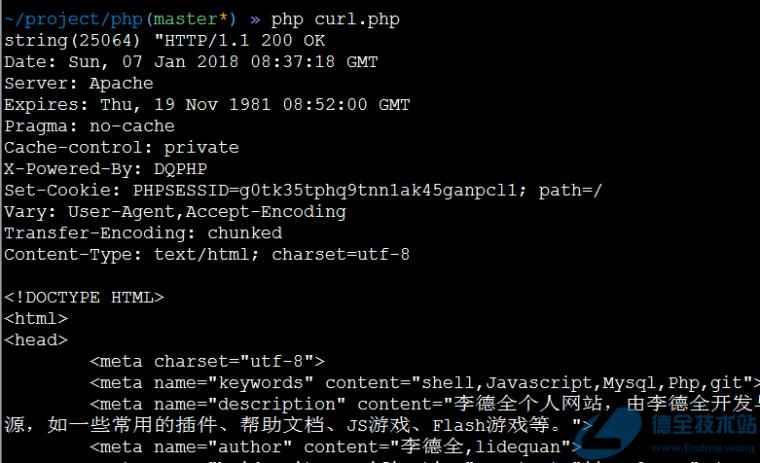

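    #include <stdio.h>
    #include <string>
    #include "curl/curl.h"

    const char* url = "http://www.baidu.com";
    const int count = 1000;

    // Discard the response body. Returning the full byte count tells
    // libcurl that everything was consumed.
    size_t write_data(void* buffer, size_t size, size_t count, void* stream) {
        (void)buffer;
        (void)stream;
        return size * count;
    }

    void curl_multi_demo() {
        CURLM* curlm = curl_multi_init();

        // Queue all transfers on the multi handle.
        for (int i = 0; i < count; ++i) {
            CURL* easy_handle = curl_easy_init();
            curl_easy_setopt(easy_handle, CURLOPT_NOSIGNAL, 1L);
            curl_easy_setopt(easy_handle, CURLOPT_URL, url);
            curl_easy_setopt(easy_handle, CURLOPT_WRITEFUNCTION, write_data);
            curl_multi_add_handle(curlm, easy_handle);
        }

        // Wait until all easy handles have finished.
        int running_handlers = 0;
        do {
            curl_multi_wait(curlm, NULL, 0, 2000, NULL);
            curl_multi_perform(curlm, &running_handlers);
        } while (running_handlers > 0);

        // Collect the results.
        int msgs_left = 0;
        CURLMsg* msg = NULL;
        while ((msg = curl_multi_info_read(curlm, &msgs_left)) != NULL) {
            if (msg->msg == CURLMSG_DONE) {
                // Copy what we need: msg is not valid after remove_handle.
                CURL* easy_handle = msg->easy_handle;
                CURLcode result = msg->data.result;

                // CURLINFO_RESPONSE_CODE writes a long, not an int.
                long http_status_code = 0;
                curl_easy_getinfo(easy_handle, CURLINFO_RESPONSE_CODE, &http_status_code);

                char* effective_url = NULL;
                curl_easy_getinfo(easy_handle, CURLINFO_EFFECTIVE_URL, &effective_url);

                fprintf(stdout, "url:%s status:%ld %s\n",
                        effective_url, http_status_code, curl_easy_strerror(result));

                curl_multi_remove_handle(curlm, easy_handle);
                curl_easy_cleanup(easy_handle);
            }
        }

        curl_multi_cleanup(curlm);
    }

    int main() {
        curl_multi_demo();
        return 0;
    }

Note: CURLINFO_RESPONSE_CODE expects a pointer to long. An earlier version of this code declared http_status_code as int, and in that version the CURLINFO_RESPONSE_CODE call and the CURLINFO_EFFECTIVE_URL call could not be swapped, otherwise the URL came out empty. I did not dig into the cause at the time, but the type mismatch is the likely culprit: where long is wider than int, libcurl writes past the int and can overwrite the neighbouring effective_url pointer.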

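As you can see, asynchronous processing works by repeatedly adding the easy handles to be fetched to the multi handle with curl_multi_add_handle, then waiting for all of them to finish with curl_multi_wait/curl_multi_perform. Because all easy handles are waited on at the same time, the elapsed time is far lower than waiting on each one synchronously with the easy interface. The part that does the blocking is this code: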

    // Wait until all easy handles have finished
    do {
        ...
    } while (running_handlers > 0);

3. Application

In real applications, though, the usage scenario often looks like this:

A module is responsible for fetching data: a service listens on a port, receives URLs, fetches the data, and sends it downstream.

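We don't want every thread to sit in the wait loop from the multi example above, doing nothing. That led to this idea: when a URL arrives, build the corresponding easy handle, add it to curlm with curl_multi_add_handle, and return. Meanwhile two threads keep running wait/perform and read respectively, and whenever a URL completes, the corresponding callback is invoked. In effect curlm is treated as a queue, with the two threads acting as producer and consumer.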

The model looks roughly like this:

    CURLM curlm = curl_multi_init()

    // Thread-Consumer
    while true:
        // read the URLs that have completed
        curl_multi_info_read
        // notify that the URL has completed and remove it from curlm
        curl_multi_remove_handle

    // Thread-Producer
    while true:
        // wait for readable sockets
        curl_multi_wait
        // check the number of running easy handles
        curl_multi_perform

    // Thread-Other
    // build a CURL easy handle from the url
    CURL curl = make_curl_easy_handle(url)
    // add it to curlm
    curl_multi_add_handle

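After a bit of testing I quickly gave up: the program crashed with a core dump inside libcurl's internal functions. curl is in fact thread safe, but its documentation also stresses this point: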

The first basic rule is that you must never share a libcurl handle (be it easy or multi or whatever) between multiple threads. Only use one handle in one thread at a time.

See here for details. This means the model above is not viable.

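I came across a libcurl-based, single-threaded, I/O-multiplexing HTTP framework here. Its dispatch part closely resembles the thread model in the Chrome source code: the CURLM object is operated on exclusively in the Dispatch::IO thread, and it is globally unique, maintained in a LazyInstance of ConnectionRunner. I could not find the implementation of dispatch_after, though, so I am not entirely sure.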

On StackOverflow I saw the idea of reusing curl handles: keep the handles in a pool, take one when needed, and return it after use. This is not viable here either.

So the standard way to write this is the example code shown earlier, as mentioned here:

The multi interface is designed for this purpose: you add multiple handles and then process all of them with one call, all in the same thread.

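4. Optimization

Next comes the question of optimization: without using the curl_multi_socket_* interfaces, is there a way to improve performance? I consulted the Persistence section of the curl documentation, which is mainly about persisting some information to speed things up (caching). It mentions: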

Each easy handle will attempt to keep the last few connections alive for a while in case they are to be used again.

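This says that each easy handle caches a number of previous connections to avoid reconnecting, caches DNS results, and so on, in order to improve performance. So the ideas to try are reusing easy handles, a global DNS cache, and the like.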

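5. Tests & Conclusions

Following the ideas above, I tested fetching the Baidu homepage 1000 times with each of these methods:

1. The curl easy interface, serially.
2. The curl easy interface, in parallel on 10 threads.
3. The curl multi interface.
4. The curl multi interface with connection reuse. (Method: do not clean up the easy handles after the first pass, and measure the time for the second pass to fetch everything.)
5. The DNS cache. (Using the curl_share_* interfaces; the first pass fills the DNS cache, and the time for the second pass to fetch everything is measured. With VERBOSE turned on you can see "hostname found in DNS Cache".)

The measured processing times are as follows: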

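| method | avg | max | min |
| --- | --- | --- | --- |
| easy | 16.457 | 21.617 | 14.262 |
| easy parallel | 2.331 | 9.496 | 1.723 |
| multi | 0.734 | 8.895 | 0.259 |
| multi reuse | 0.00113 | 0.001557 | 0.000898 |
| multi reuse cache | 0.00109 | 0.00140 | 0.000828 |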

Methods 4 and 5 differ very little. It is also unclear whether the DNS cache still has any positive effect once connections are being reused.

The corresponding test code is on gist: 1 2 3 4 5

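6. Addendum

There are many discussion threads about curl performance, such as this one, which also describes the basic flow of fetching a web page:

1. Request from your DNS server, the IP corresponding to the name of the site you requested
2. Use the server's reply to open a socket to that IP, port 80
3. Send a small HTTP message describing what you want
4. Receive the html code

There are some performance suggestions here that use the curl_multi_socket_* interfaces, which I did not use.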