Reposted from the WeChat official account TonyBai

Reference: Go language Chinese documentation; www.topgoer.com

    // From https://tip.golang.org/pkg/net/http/#example_ListenAndServe
    package main

    import (
        "io"
        "log"
        "net/http"
    )

    func main() {
        // Handlers receive the request as a pointer (*http.Request).
        helloHandler := func(w http.ResponseWriter, req *http.Request) {
            io.WriteString(w, "Hello, world!\n")
        }

        http.HandleFunc("/hello", helloHandler)
        log.Fatal(http.ListenAndServe(":8080", nil))
    }

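Go's net/http package is a well-balanced, general-purpose implementation that meets the needs of most gophers in more than 90% of scenarios, and it comes with a number of advantages.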

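However, precisely because net/http is a "balanced" general-purpose implementation, its performance may fall short in domains with strict performance requirements, and it leaves little room for tuning. In those cases we turn to third-party HTTP server frameworks. Among them, fasthttp [1], a framework that lives up to its name, is mentioned and adopted most often. The fasthttp project site claims it is ten times faster than net/http (based on go test benchmark results). fasthttp applies many performance best practices [2], especially around reusing in-memory objects: it makes heavy use of sync.Pool [3] to reduce pressure on the Go GC. So how much faster than net/http is fasthttp in a real environment? I happen to have two reasonably powerful servers at hand, so in this article we look at the actual performance of both in that environment.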

2. Performance test

We implemented two nearly "zero-business-logic" programs under test, one with net/http and one with fasthttp:

nethttp:

    // github.com/bigwhite/experiments/blob/master/http-benchmark/nethttp/main.go
    package main

    import (
        _ "expvar"
        "log"
        "net/http"
        _ "net/http/pprof"
        "runtime"
        "time"
    )

    func main() {
        // Log the number of goroutines once per second.
        go func() {
            for {
                log.Println("current goroutine count:", runtime.NumGoroutine())
                time.Sleep(time.Second)
            }
        }()

        http.Handle("/", http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            w.Write([]byte("Hello, Go!"))
        }))

        log.Fatal(http.ListenAndServe(":8080", nil))
    }

fasthttp:

    // github.com/bigwhite/experiments/blob/master/http-benchmark/fasthttp/main.go
    package main

    import (
        "fmt"
        "log"
        "net/http"
        "runtime"
        "time"

        _ "expvar"
        _ "net/http/pprof"

        "github.com/valyala/fasthttp"
    )

    type HelloGoHandler struct {
    }

    func fastHTTPHandler(ctx *fasthttp.RequestCtx) {
        fmt.Fprintln(ctx, "Hello, Go!")
    }

    func main() {
        // Serve expvar and pprof on a separate net/http listener.
        go func() {
            http.ListenAndServe(":6060", nil)
        }()

        // Log the number of goroutines once per second.
        go func() {
            for {
                log.Println("current goroutine count:", runtime.NumGoroutine())
                time.Sleep(time.Second)
            }
        }()

        s := &fasthttp.Server{
            Handler: fastHTTPHandler,
        }
        s.ListenAndServe(":8081")
    }

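The client that applies load to the targets is built on hey [4], an HTTP load-testing tool. To make it easy to adjust the load level, we wrap hey in the following shell script (Linux only):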

    # github.com/bigwhite/experiments/blob/master/http-benchmark/client/http_client_load.sh
    # ./http_client_load.sh 3 10000 10 GET http://10.10.195.181:8080
    echo "$0 task_num count_per_hey conn_per_hey method url"
    task_num=$1
    count_per_hey=$2
    conn_per_hey=$3
    method=$4
    url=$5

    start=$(date +%s%N)
    for((i=1; i<=$task_num; i++)); do {
        tm=$(date +%T.%N)
        echo "$tm: task $i start"
        hey -n $count_per_hey -c $conn_per_hey -m $method $url > hey_$i.log
        tm=$(date +%T.%N)
        echo "$tm: task $i done"
    } & done
    wait
    end=$(date +%s%N)

    # Total requests = number of tasks * requests per hey.
    count=$(( $task_num * $count_per_hey ))
    runtime_ns=$(( $end - $start ))
    runtime=`echo "scale=2; $runtime_ns / 1000000000" | bc`
    echo "runtime: "$runtime
    speed=`echo "scale=2; $count / $runtime" | bc`
    echo "speed: "$speed

A sample run of the script looks like this:

    bash http_client_load.sh 8 1000000 200 GET http://10.10.195.134:8080
    http_client_load.sh task_num count_per_hey conn_per_hey method url
    16:58:09.146948690: task 1 start
    16:58:09.147235080: task 2 start
    16:58:09.147290430: task 3 start
    16:58:09.147740230: task 4 start
    16:58:09.147896010: task 5 start
    16:58:09.148314900: task 6 start
    16:58:09.148446030: task 7 start
    16:58:09.148930840: task 8 start
    16:58:45.001080740: task 3 done
    16:58:45.241903500: task 8 done
    16:58:45.261501940: task 1 done
    16:58:50.032383770: task 4 done
    16:58:50.985076450: task 7 done
    16:58:51.269099430: task 5 done
    16:58:52.008164010: task 6 done
    16:58:52.166402430: task 2 done
    runtime: 43.02
    speed: 185960.01

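Judging from the arguments, the script starts 8 tasks in parallel (each task runs one hey); each task opens 200 concurrent connections to http://10.10.195.134:8080 and sends 1,000,000 HTTP GET requests. We used two servers, one hosting the programs under test and one hosting the load script: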

Server hosting the programs under test: 10.10.195.181 (physical machine, Intel x86-64 CPU, 40 cores, 128G memory, CentOS 7.6)

    $ cat /etc/redhat-release
    CentOS Linux release 7.6.1810 (Core)

    $ lscpu
    Architecture:          x86_64
    CPU op-mode(s):        32-bit, 64-bit
    Byte Order:            Little Endian
    CPU(s):                40
    On-line CPU(s) list:   0-39
    Thread(s) per core:    2
    Core(s) per socket:    10
    Socket(s):             2
    NUMA node(s):          2
    Vendor ID:             GenuineIntel
    CPU family:            6
    Model:                 85
    Model name:            Intel(R) Xeon(R) Silver 4114 CPU @ 2.20GHz
    Stepping:              4
    CPU MHz:               800.000
    CPU max MHz:           2201.0000
    CPU min MHz:           800.0000
    BogoMIPS:              4400.00
    Virtualization:        VT-x
    L1d cache:             32K
    L1i cache:             32K
    L2 cache:              1024K
    L3 cache:              14080K
    NUMA node0 CPU(s):     0-9,20-29
    NUMA node1 CPU(s):     10-19,30-39
    Flags:                 fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch epb cat_l3 cdp_l3 intel_pt ssbd mba ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm mpx rdt_a avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts pku ospke spec_ctrl intel_stibp flush_l1d

Server hosting the load tool: 10.10.195.133 (physical machine, Kunpeng arm64 CPU, 96 cores, 80G memory, CentOS 7.9)

    # cat /etc/redhat-release
    CentOS Linux release 7.9.2009 (AltArch)

    # lscpu
    Architecture:          aarch64
    Byte Order:            Little Endian
    CPU(s):                96
    On-line CPU(s) list:   0-95
    Thread(s) per core:    1
    Core(s) per socket:    48
    Socket(s):             2
    NUMA node(s):          4
    Model:                 0
    CPU max MHz:           2600.0000
    CPU min MHz:           200.0000
    BogoMIPS:              200.00
    L1d cache:             64K
    L1i cache:             64K
    L2 cache:              512K
    L3 cache:              49152K
    NUMA node0 CPU(s):     0-23
    NUMA node1 CPU(s):     24-47
    NUMA node2 CPU(s):     48-71
    NUMA node3 CPU(s):     72-95
    Flags:                 fp asimd evtstrm aes pmull sha1 sha2 crc32 atomics fphp asimdhp cpuid asimdrdm jsc

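I used dstat to monitor resource usage on the target host (dstat -tcdngym), CPU load in particular, and watched memstats [5] through expvarmon to see how the target program's consumption of various resources ranked.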

The table below was compiled from multiple test runs:

Figure: test data

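3. Brief analysis of the results

The results above are limited by the specific scenario, the accuracy of the test tool and script, and the load-testing environment, but they do reflect the real performance trend of the targets. Under the same load, fasthttp did not deliver 10 times the performance of net/http; in this particular scenario it did not even reach twice the performance of net/http. In the test cases where CPU usage on the target host was close to 70%, fasthttp outperformed net/http by only about 30% to 70%.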

So why did fasthttp fall short of expectations? To answer that, we need to look at how net/http and fasthttp are each implemented.

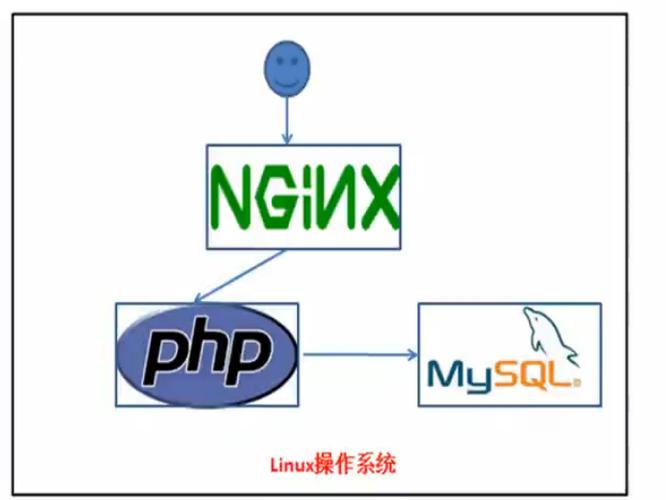

Let's first look at a diagram of how net/http works:

Figure: how net/http works

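The principle behind the http package on the server side is simple: after accepting a connection (conn), it hands that conn to a worker goroutine, which lives until the conn's lifecycle ends, that is, until the connection is closed. Below is a diagram of how fasthttp works: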

Figure: how fasthttp works

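fasthttp, by contrast, is designed to reuse goroutines as much as possible rather than create a new one each time. After fasthttp's Server accepts a conn, it tries to take a channel out of the ready slice in the workerpool; each such channel corresponds one-to-one to a worker goroutine. Once a channel is taken, the accepted conn is written to it, and the worker goroutine at the other end handles the reads and writes on that conn. After finishing with the conn, the worker goroutine does not exit; it puts its channel back into the workerpool's ready slice and waits to be picked again.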
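The reuse mechanism described above can be sketched in a few dozen lines. This is a simplified illustration, not fasthttp's actual code: the `conn` type, the `workerPool` fields, and the `spawnedFor` helper are all hypothetical names made up for the example, and the real workerpool also handles limits, idle-worker cleanup, and shutdown. The sketch counts how many worker goroutines get created when connections finish quickly versus when every connection stays busy.

```go
package main

import (
	"fmt"
	"sync"
)

// conn is a hypothetical stand-in for an accepted network connection.
type conn struct{ id int }

// workerPool keeps the channels of idle workers in a ready slice.
// Each channel belongs to exactly one worker goroutine.
type workerPool struct {
	mu      sync.Mutex
	ready   []chan conn
	spawned int // worker goroutines created so far
	wg      sync.WaitGroup
	handle  func(conn)
}

// getCh reuses an idle worker's channel if one is available,
// otherwise it starts a new worker goroutine.
func (p *workerPool) getCh() chan conn {
	p.mu.Lock()
	if n := len(p.ready); n > 0 {
		ch := p.ready[n-1]
		p.ready = p.ready[:n-1]
		p.mu.Unlock()
		return ch
	}
	p.spawned++
	p.mu.Unlock()
	ch := make(chan conn, 1)
	go p.worker(ch)
	return ch
}

// worker serves one conn at a time and then puts its channel back
// into the ready slice instead of exiting. (Workers are never shut
// down in this sketch.)
func (p *workerPool) worker(ch chan conn) {
	for c := range ch {
		p.handle(c)
		p.mu.Lock()
		p.ready = append(p.ready, ch)
		p.mu.Unlock()
		p.wg.Done()
	}
}

// Serve hands an accepted conn to a worker.
func (p *workerPool) Serve(c conn) {
	p.wg.Add(1)
	p.getCh() <- c
}

// spawnedFor serves n conns and reports how many workers were created.
// With shortLived=false every conn stays busy until all are accepted,
// which mimics long-lived, saturated keep-alive connections.
func spawnedFor(shortLived bool, n int) int {
	release := make(chan struct{})
	p := &workerPool{}
	p.handle = func(conn) {
		if !shortLived {
			<-release
		}
	}
	for i := 0; i < n; i++ {
		p.Serve(conn{id: i})
		if shortLived {
			p.wg.Wait() // the conn is finished before the next one arrives
		}
	}
	close(release)
	p.wg.Wait()
	return p.spawned
}

func main() {
	fmt.Println("short-lived conns, workers created:", spawnedFor(true, 5))
	fmt.Println("long-lived conns, workers created: ", spawnedFor(false, 5))
}
```

When each conn completes before the next arrives, a single worker is reused for all of them; when every conn stays busy, the pool has no idle worker to hand out and ends up with one goroutine per conn.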

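fasthttp's goroutine reuse strategy is well intentioned, but its effect is not obvious in this test scenario. The test results show that, under the same client concurrency and load, net/http and fasthttp use almost the same number of goroutines. This is caused by the test model: each hey in each task opens a fixed number of persistent (keep-alive) connections to the target and then sends "saturating" requests on every connection. Once a goroutine in the fasthttp workerpool receives a conn, it can only return to the pool after communication on that conn ends, and the conn is not closed until the test finishes. Such a scenario therefore makes fasthttp "degenerate" into the net/http model and inherit its "defect": once the number of goroutines grows large, the cost of the Go runtime's own scheduling is no longer negligible and can even exceed the share of resources spent on business logic. Below are the CPU profiles of fasthttp with 200, 8000, and 16000 persistent connections: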

200 persistent connections:

    (pprof) top -cum
    Showing nodes accounting for 88.17s, 55.35% of 159.30s total
    Dropped 150 nodes (cum <= 0.80s)
    Showing top 10 nodes out of 60
          flat   flat%    sum%        cum    cum%
         0.46s   0.29%   0.29%    101.46s  63.69%  github.com/valyala/fasthttp.(*Server).serveConn
             0      0%   0.29%    101.46s  63.69%  github.com/valyala/fasthttp.(*workerPool).getCh.func1
             0      0%   0.29%    101.46s  63.69%  github.com/valyala/fasthttp.(*workerPool).workerFunc
         0.04s  0.025%   0.31%     89.46s  56.16%  internal/poll.ignoringEINTRIO (inline)
        87.38s  54.85%  55.17%     89.27s  56.04%  syscall.Syscall
         0.12s  0.075%  55.24%     60.39s  37.91%  bufio.(*Writer).Flush
             0      0%  55.24%     60.22s  37.80%  net.(*conn).Write
         0.08s   0.05%  55.29%     60.21s  37.80%  net.(*netFD).Write
         0.09s  0.056%  55.35%     60.12s  37.74%  internal/poll.(*FD).Write
             0      0%  55.35%     59.86s  37.58%  syscall.Write (inline)
    (pprof)

8000 persistent connections:

    (pprof) top -cum
    Showing nodes accounting for 108.51s, 54.46% of 199.23s total
    Dropped 204 nodes (cum <= 1s)
    Showing top 10 nodes out of 66
          flat   flat%    sum%        cum    cum%
             0      0%      0%    119.11s  59.79%  github.com/valyala/fasthttp.(*workerPool).getCh.func1
             0      0%      0%    119.11s  59.79%  github.com/valyala/fasthttp.(*workerPool).workerFunc
         0.69s   0.35%   0.35%    119.05s  59.76%  github.com/valyala/fasthttp.(*Server).serveConn
         0.04s   0.02%   0.37%    104.22s  52.31%  internal/poll.ignoringEINTRIO (inline)
       101.58s  50.99%  51.35%    103.95s  52.18%  syscall.Syscall
         0.10s   0.05%  51.40%     79.95s  40.13%  runtime.mcall
         0.06s   0.03%  51.43%     79.85s  40.08%  runtime.park_m
         0.23s   0.12%  51.55%     79.30s  39.80%  runtime.schedule
         5.67s   2.85%  54.39%     77.47s  38.88%  runtime.findrunnable
         0.14s   0.07%  54.46%     68.96s  34.61%  bufio.(*Writer).Flush

16000 persistent connections:

    (pprof) top -cum
    Showing nodes accounting for 239.60s, 87.07% of 275.17s total
    Dropped 190 nodes (cum <= 1.38s)
    Showing top 10 nodes out of 46
          flat   flat%    sum%        cum    cum%
         0.04s  0.015%  0.015%    153.38s  55.74%  runtime.mcall
         0.01s 0.0036%  0.018%    153.34s  55.73%  runtime.park_m
         0.12s  0.044%  0.062%       153s  55.60%  runtime.schedule
         0.66s   0.24%    0.3%    152.66s  55.48%  runtime.findrunnable
         0.15s  0.055%   0.36%    127.53s  46.35%  runtime.netpoll
       127.04s  46.17%  46.52%    127.04s  46.17%  runtime.epollwait
             0      0%  46.52%       121s  43.97%  github.com/valyala/fasthttp.(*workerPool).getCh.func1
             0      0%  46.52%       121s  43.97%  github.com/valyala/fasthttp.(*workerPool).workerFunc
         0.41s   0.15%  46.67%    120.18s  43.67%  github.com/valyala/fasthttp.(*Server).serveConn
       111.17s  40.40%  87.07%    111.99s  40.70%  syscall.Syscall
    (pprof)

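Comparing these profiles, we find that as the number of persistent connections grows (that is, as the number of goroutines in the workerpool grows), the share of Go runtime scheduling rises steadily; at 16000 connections, the runtime scheduling functions already occupy the top four positions.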

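4. Optimization approaches

From the test results above, we see that fasthttp's model is not well suited to a scenario where connections, once established, carry sustained "saturating" requests. It is better suited to short-lived connections, or to persistent connections without sustained saturating requests; in those scenarios its goroutine reuse model can show its strengths.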

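Yet even after "degenerating" into the net/http model, fasthttp still performs somewhat better than net/http. Why? The gain comes mainly from fasthttp's memory-allocation optimization tricks, such as heavy use of sync.Pool and avoiding conversions between []byte and string.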
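The two tricks named above can be illustrated with the standard library alone. The following is a minimal sketch rather than code taken from fasthttp: `bufPool`, `render`, and `appendGreeting` are hypothetical names chosen for the example. It reuses a buffer through sync.Pool instead of allocating one per request, and builds a response directly in a []byte without passing through an intermediate string.

```go
package main

import (
	"bytes"
	"fmt"
	"sync"
)

// bufPool hands out reusable buffers so that each request does not
// have to allocate (and later garbage-collect) a fresh one.
var bufPool = sync.Pool{
	New: func() interface{} { return new(bytes.Buffer) },
}

// render builds a greeting using a pooled buffer.
func render(name string) string {
	buf := bufPool.Get().(*bytes.Buffer)
	buf.Reset()            // a pooled buffer may still hold old data
	defer bufPool.Put(buf) // return the buffer for the next caller

	buf.WriteString("Hello, ")
	buf.WriteString(name)
	buf.WriteByte('!')
	return buf.String() // String copies, so reusing buf afterwards is safe
}

// appendGreeting appends the greeting to dst and works on []byte
// throughout, so no []byte <-> string conversion of name is needed.
func appendGreeting(dst, name []byte) []byte {
	dst = append(dst, "Hello, "...)
	dst = append(dst, name...)
	return append(dst, '!')
}

func main() {
	fmt.Println(render("Go"))

	// The caller can keep reusing the same backing array across calls.
	scratch := make([]byte, 0, 64)
	scratch = appendGreeting(scratch[:0], []byte("Go"))
	fmt.Println(string(scratch))
}
```

Passing a caller-owned `dst` slice into an append-style function is a common Go idiom for letting hot paths reuse memory; the pool serves the same goal when the buffer's owner changes from request to request.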

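So, under sustained "saturating" requests, how can we keep the number of goroutines in the fasthttp workerpool from growing linearly with the number of conns? The fasthttp project gives no answer, but one path worth considering is OS-level I/O multiplexing (epoll on Linux), the same mechanism the Go runtime netpoll uses. With multiplexing, each goroutine in the workerpool can handle multiple connections at the same time, so we can size the workerpool according to the scale of the business instead of letting the number of goroutines grow almost without bound as it does now. Of course, introducing epoll at the user level may also bring problems such as a larger share of system calls and higher response latency. Whether this path is feasible depends on the concrete implementation and test results.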
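To make the shape of that idea concrete, here is a toy model of a fixed-size worker pool serving many connections. It is only a sketch under strong assumptions: it does not call epoll at all. A Go channel of hypothetical `readyEvent` values stands in for the readiness notifications a poller would deliver, and `serveMultiplexed` and `demo` are made-up names. It shows only that the number of worker goroutines is chosen up front and stays independent of the number of connections.

```go
package main

import (
	"fmt"
	"sync"
)

// readyEvent is a hypothetical stand-in for "connection connID has a
// request ready to read", which a real implementation would learn
// from an epoll-style poller.
type readyEvent struct {
	connID int
}

// serveMultiplexed starts a fixed number of workers that all consume
// readiness events, whichever connection they come from. It returns
// the number of requests handled per connection.
func serveMultiplexed(workers int, events <-chan readyEvent) map[int]int {
	var (
		mu      sync.Mutex
		wg      sync.WaitGroup
		handled = make(map[int]int)
	)
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for ev := range events {
				// "Handle" one request on this connection.
				mu.Lock()
				handled[ev.connID]++
				mu.Unlock()
			}
		}()
	}
	wg.Wait()
	return handled
}

// demo simulates conns connections, each sending reqsPerConn requests,
// and reports how many connections were served and how many requests
// were handled in total.
func demo(conns, reqsPerConn, workers int) (int, int) {
	events := make(chan readyEvent)
	go func() {
		for r := 0; r < reqsPerConn; r++ {
			for c := 0; c < conns; c++ {
				events <- readyEvent{connID: c}
			}
		}
		close(events)
	}()

	handled := serveMultiplexed(workers, events)
	total := 0
	for _, n := range handled {
		total += n
	}
	return len(handled), total
}

func main() {
	served, total := demo(1000, 3, 4)
	fmt.Printf("4 workers served %d connections, %d requests\n", served, total)
}
```

A real design would also have to keep requests on the same connection in order and cope with partial reads and writes, which this model ignores; those are the parts where the extra system calls and latency mentioned above would show up.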

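Note: Concurrency in fasthttp.Server can be used to limit the number of goroutines processing concurrently in the workerpool. However, because each goroutine handles only one connection, if Concurrency is set too low, subsequent connections may be refused service by fasthttp. That is why fasthttp's default Concurrency is: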

    const DefaultConcurrency = 256 * 1024