来源:51CTO博客

一、数据工具pandas紧张有两种数据工具:Series、DataFrame

注: 后面代码利用pandas版本0.20.1,通过import pandas as pd引入

1. Series

Series是一种带有索引的序列工具。

大略创建如下:

# 通过传入一个序列给pd.Series初始化一个Series工具, 比如lists1=pd.Series(list(\公众1234\"大众))print(s1)0 11 22 33 4dtype:object

2. DataFrame类似与数据库table有行列的数据工具。

创建办法如下:

# 通过传入一个numpy的二维数组或者dict工具给pd.DataFrame初始化一个DataFrame工具# 通过numpy二维数组import numpy as npdf1 = pd.DataFrame(np.random.randn(6,4))print(df1)0 1 2 30 -0.646340 -1.249943 0.393323 -1.5618731 0.371630 0.069426 1.693097 0.9074192 -0.328575 -0.256765 0.693798 -0.7873433 1.875764 -0.416275 -1.028718 0.1582594 1.644791 -1.321506 -0.337425 0.8206895 0.006391 -1.447894 0.506203 0.977295# 通过dict字典df2 = pd.DataFrame({ 'A' : 1.,'B' : pd.Timestamp('20130102'),'C' :pd.Series(1,index=list(range(4)),dtype='float32'),'D' : np.array([3] 4,dtype='int32'),'E' : pd.Categorical([\"大众test\公众,\"大众train\公众,\公众test\公众,\"大众train\公众]),'F' : 'foo' })print(df2)A B C D E F0 1.0 2013-01-02 1.0 3 test foo1 1.0 2013-01-02 1.0 3 train foo2 1.0 2013-01-02 1.0 3 test foo3 1.0 2013-01-02 1.0 3 train foo

3. 索引不管是Series工具还是DataFrame工具都有一个对工具相对应的索引,Series的索引类似于每个元素, DataFrame的索引对应着每一行。查看:在创建工具的时候,每个工具都会初始化一个起始值为0,自增的索引列表, DataFrame同理。

# 打印工具的时候,第一列便是索引print(s1)0 11 22 33 4dtype: object# 或者只查看索引, DataFrame同理print(s1.index)

二、增删查改

这里的增删查改紧张基于DataFrame工具,为了有足足数据用于展示,这里选择tushare的数据。1. tushare安装

pip install tushare

创建数据工具如下:

import tushare as tsdf = ts.get_k_data(\公众000001\公众)

DataFrame 行列,axis 图解:

2. 查询

查看每列的数据类型

# 查看df数据类型df.dtypesdate objectopen float64close float64high float64low float64volume float64code objectdtype: object

查看指定指天命量的行:head函数默认查看前5行,tail函数默认查看后5行,可以通报指定的数值用于查看指定行数。

查看前5行df.headdate open close high low volume code0 2015-12-23 9.927 9.935 10.174 9.871 1039018.0 0000011 2015-12-24 9.919 9.823 9.998 9.744 640229.0 0000012 2015-12-25 9.855 9.879 9.927 9.815 399845.0 0000013 2015-12-28 9.895 9.537 9.919 9.537 822408.0 0000014 2015-12-29 9.545 9.624 9.632 9.529 619802.0 000001# 查看后5行df.taildate open close high low volume code636 2018-08-01 9.42 9.15 9.50 9.11 814081.0 000001637 2018-08-02 9.13 8.94 9.15 8.88 931401.0 000001638 2018-08-03 8.93 8.91 9.10 8.91 476546.0 000001639 2018-08-06 8.94 8.94 9.11 8.89 554010.0 000001640 2018-08-07 8.96 9.17 9.17 8.88 690423.0 000001# 查看前10行df.head(10)date open close high low volume code0 2015-12-23 9.927 9.935 10.174 9.871 1039018.0 0000011 2015-12-24 9.919 9.823 9.998 9.744 640229.0 0000012 2015-12-25 9.855 9.879 9.927 9.815 399845.0 0000013 2015-12-28 9.895 9.537 9.919 9.537 822408.0 0000014 2015-12-29 9.545 9.624 9.632 9.529 619802.0 0000015 2015-12-30 9.624 9.632 9.640 9.513 532667.0 0000016 2015-12-31 9.632 9.545 9.656 9.537 491258.0 0000017 2016-01-04 9.553 8.995 9.577 8.940 563497.0 0000018 2016-01-05 8.972 9.075 9.210 8.876 663269.0 0000019 2016-01-06 9.091 9.179 9.202 9.067 515706.0 000001

查看某一行或多行,某一列或多列

# 查看第一行df[0:1]date open close high low volume code0 2015-12-23 9.927 9.935 10.174 9.871 1039018.0 000001# 查看 10到20行df[10:21]date open close high low volume code10 2016-01-07 9.083 8.709 9.083 8.685 174761.0 00000111 2016-01-08 8.924 8.852 8.987 8.677 747527.0 00000112 2016-01-11 8.757 8.566 8.820 8.502 732013.0 00000113 2016-01-12 8.621 8.605 8.685 8.470 561642.0 00000114 2016-01-13 8.669 8.526 8.709 8.518 391709.0 00000115 2016-01-14 8.430 8.574 8.597 8.343 666314.0 00000116 2016-01-15 8.486 8.327 8.597 8.295 448202.0 00000117 2016-01-18 8.231 8.287 8.406 8.199 421040.0 00000118 2016-01-19 8.319 8.526 8.582 8.287 501109.0 00000119 2016-01-20 8.518 8.390 8.597 8.311 603752.0 00000120 2016-01-21 8.343 8.215 8.558 8.215 606145.0 000001# 查看看Date列前5个数据df[\"大众date\"大众].head # 或者df.date.head0 2015-12-231 2015-12-242 2015-12-253 2015-12-284 2015-12-29Name: date, dtype: object# 查看看Date列,code列, open列前5个数据df[[\公众date\"大众,\公众code\公众, \公众open\"大众]].headdate code open0 2015-12-23 000001 9.9271 2015-12-24 000001 9.9192 2015-12-25 000001 9.8553 2015-12-28 000001 9.8954 2015-12-29 000001 9.545

利用行列组合条件查询

# 查看date, code列的第10行df.loc[10, [\"大众date\公众, \公众code\"大众]]date 2016-01-07code 000001Name: 10, dtype: object# 查看date, code列的第10行到20行df.loc[10:20, [\"大众date\"大众, \公众code\"大众]]date code10 2016-01-07 00000111 2016-01-08 00000112 2016-01-11 00000113 2016-01-12 00000114 2016-01-13 00000115 2016-01-14 00000116 2016-01-15 00000117 2016-01-18 00000118 2016-01-19 00000119 2016-01-20 00000120 2016-01-21 000001# 查看第一行,open列的数据df.loc[0, \"大众open\公众]9.9269999999999996

通过位置查询:值得把稳的是上面的索引值便是特定的位置。

# 查看第1行df.iloc[0]date 2015-12-24open 9.919close 9.823high 9.998low 9.744volume 640229code 000001Name: 0, dtype: object# 查看末了一行df.iloc[-1]date 2018-08-08open 9.16close 9.12high 9.16low 9.1volume 29985code 000001Name: 640, dtype: object# 查看第一列,前5个数值df.iloc[:,0].head0 2015-12-241 2015-12-252 2015-12-283 2015-12-294 2015-12-30Name: date, dtype: object# 查看前2到4行,第1,3列df.iloc[2:4,[0,2]]date close2 2015-12-28 9.5373 2015-12-29 9.624

通过条件筛选:

查看open列大于10的前5行df[df.open > 10].headdate open close high low volume code378 2017-07-14 10.483 10.570 10.609 10.337 1722570.0 000001379 2017-07-17 10.619 10.483 10.987 10.396 3273123.0 000001380 2017-07-18 10.425 10.716 10.803 10.299 2349431.0 000001381 2017-07-19 10.657 10.754 10.851 10.551 1933075.0 000001382 2017-07-20 10.745 10.638 10.880 10.580 1537338.0 000001# 查看open列大于10且open列小于10.6的前五行df[(df.open > 10) & (df.open < 10.6)].headdate open close high low volume code378 2017-07-14 10.483 10.570 10.609 10.337 1722570.0 000001380 2017-07-18 10.425 10.716 10.803 10.299 2349431.0 000001387 2017-07-27 10.550 10.422 10.599 10.363 1194490.0 000001388 2017-07-28 10.441 10.569 10.638 10.412 819195.0 000001390 2017-08-01 10.471 10.865 10.904 10.432 2035709.0 000001# 查看open列大于10或open列小于10.6的前五行df[(df.open > 10) | (df.open < 10.6)].headdate open close high low volume code0 2015-12-24 9.919 9.823 9.998 9.744 640229.0 0000011 2015-12-25 9.855 9.879 9.927 9.815 399845.0 0000012 2015-12-28 9.895 9.537 9.919 9.537 822408.0 0000013 2015-12-29 9.545 9.624 9.632 9.529 619802.0 0000014 2015-12-30 9.624 9.632 9.640 9.513 532667.0 000001

3. 增加

在前面已经大略的解释Series, DataFrame的创建,这里说一些常用有用的创建办法。

# 创建2018-08-08到2018-08-15的韶光序列,默认韶光间隔为Days2 = pd.date_range(\"大众20180808\公众, periods=7)print(s2)DatetimeIndex(['2018-08-08', '2018-08-09', '2018-08-10', '2018-08-11','2018-08-12', '2018-08-13', '2018-08-14'],dtype='datetime64[ns]', freq='D')# 指定2018-08-08 00:00 到2018-08-09 00:00 韶光间隔为小时# freq参数可利用参数, 参考: http://pandas.pydata.org/pandas-docs/stable/timeseries.html#offset-aliasess3 = pd.date_range(\"大众20180808\"大众, \"大众20180809\"大众, freq=\"大众H\"大众)print(s2)DatetimeIndex(['2018-08-08 00:00:00', '2018-08-08 01:00:00','2018-08-08 02:00:00', '2018-08-08 03:00:00','2018-08-08 04:00:00', '2018-08-08 05:00:00','2018-08-08 06:00:00', '2018-08-08 07:00:00','2018-08-08 08:00:00', '2018-08-08 09:00:00','2018-08-08 10:00:00', '2018-08-08 11:00:00','2018-08-08 12:00:00', '2018-08-08 13:00:00','2018-08-08 14:00:00', '2018-08-08 15:00:00','2018-08-08 16:00:00', '2018-08-08 17:00:00','2018-08-08 18:00:00', '2018-08-08 19:00:00','2018-08-08 20:00:00', '2018-08-08 21:00:00','2018-08-08 22:00:00', '2018-08-08 23:00:00','2018-08-09 00:00:00'],dtype='datetime64[ns]', freq='H')# 通过已有序列创建韶光序列s4 = pd.to_datetime(df.date.head)print(s4)0 2015-12-241 2015-12-252 2015-12-283 2015-12-294 2015-12-30Name: date, dtype: datetime64[ns]

4. 修正# 将df 的索引修正为date列的数据,并且将类型转换为datetime类型df.index = pd.to_datetime(df.date)df.headdate open close high low volume code date2015-12-24 2015-12-24 9.919 9.823 9.998 9.744 640229.0 0000012015-12-25 2015-12-25 9.855 9.879 9.927 9.815 399845.0 0000012015-12-28 2015-12-28 9.895 9.537 9.919 9.537 822408.0 0000012015-12-29 2015-12-29 9.545 9.624 9.632 9.529 619802.0 0000012015-12-30 2015-12-30 9.624 9.632 9.640 9.513 532667.0 000001# 修正列的字段df.columns = [\公众Date\"大众, \公众Open\公众,\"大众Close\公众,\"大众High\"大众,\"大众Low\公众,\"大众Volume\"大众,\"大众Code\"大众]print(df.head)Date Open Close High Low Volume Code date2015-12-24 2015-12-24 9.919 9.823 9.998 9.744 640229.0 0000012015-12-25 2015-12-25 9.855 9.879 9.927 9.815 399845.0 0000012015-12-28 2015-12-28 9.895 9.537 9.919 9.537 822408.0 0000012015-12-29 2015-12-29 9.545 9.624 9.632 9.529 619802.0 0000012015-12-30 2015-12-30 9.624 9.632 9.640 9.513 532667.0 000001# 将Open列每个数值加1, apply方法并不直接修正源数据,以是须要将新值复制给dfdf.Open = df.Open.apply(lambda x: x+1)df.headDate Open Close High Low Volume Code date2015-12-24 2015-12-24 10.919 9.823 9.998 9.744 640229.0 0000012015-12-25 2015-12-25 10.855 9.879 9.927 9.815 399845.0 0000012015-12-28 2015-12-28 10.895 9.537 9.919 9.537 822408.0 0000012015-12-29 2015-12-29 10.545 9.624 9.632 9.529 619802.0 0000012015-12-30 2015-12-30 10.624 9.632 9.640 9.513 532667.0 000001# 将Open,Close列都数值上加1,如果多列,apply吸收的工具是全体列df[[\公众Open\"大众, \"大众Close\公众]].head.apply(lambda x: x.apply(lambda x: x+1))Open Closedate2015-12-24 11.919 10.8232015-12-25 11.855 10.8792015-12-28 11.895 10.5372015-12-29 11.545 10.6242015-12-30 11.624 10.632

5. 删除通过drop方法drop指定的行或者列。

把稳: drop方法并不直接修正源数据,如果须要使源dataframe工具被修正,须要传入inplace=True,通过之前的axis图解,知道行的值(或者说label)在axis=0,列的值(或者说label)在axis=1。

# 删除指定列,删除Open列df.drop(\"大众Open\公众, axis=1).head #或者df.drop(df.columns[1])Date Close High Low Volume Code date2015-12-24 2015-12-24 9.823 9.998 9.744 640229.0 0000012015-12-25 2015-12-25 9.879 9.927 9.815 399845.0 0000012015-12-28 2015-12-28 9.537 9.919 9.537 822408.0 0000012015-12-29 2015-12-29 9.624 9.632 9.529 619802.0 0000012015-12-30 2015-12-30 9.632 9.640 9.513 532667.0 000001# 删除第1,3列. 即Open,High列df.drop(df.columns[[1,3]], axis=1).head # 或df.drop([\"大众Open\"大众, \公众High], axis=1).headDate Close Low Volume Code date2015-12-24 2015-12-24 9.823 9.744 640229.0 0000012015-12-25 2015-12-25 9.879 9.815 399845.0 0000012015-12-28 2015-12-28 9.537 9.537 822408.0 0000012015-12-29 2015-12-29 9.624 9.529 619802.0 0000012015-12-30 2015-12-30 9.632 9.513 532667.0 000001

三、pandas常用函数

1. 统计

# descibe方法司帐算每列数据工具是数值的count, mean, std, min, max, 以及一定比率的值df.describeOpen Close High Low Volumecount 641.0000 641.0000 641.0000 641.0000 641.0000mean 10.7862 9.7927 9.8942 9.6863 833968.6162std 1.5962 1.6021 1.6620 1.5424 607731.6934min 8.6580 7.6100 7.7770 7.4990 153901.000025% 9.7080 8.7180 8.7760 8.6500 418387.000050% 10.0770 9.0960 9.1450 8.9990 627656.000075% 11.8550 10.8350 10.9920 10.7270 1039297.0000max 15.9090 14.8600 14.9980 14.4470 4262825.0000# 单独统计Open列的均匀值df.Open.mean10.786248049922001# 查看居于95%的值, 默认线性拟合df.Open.quantile(0.95)14.187# 查看Open列每个值涌现的次数df.Open.value_counts.head9.8050 129.8630 109.8440 109.8730 109.8830 8Name: Open, dtype: int64

2. 缺失落值处理

删除或者添补缺失落值。

# 删除含有NaN的任意行df.dropna(how='any')# 删除含有NaN的任意列df.dropna(how='any', axis=1)# 将NaN的值改为5df.fillna(value=5)

3. 排序按行或者列排序, 默认也不修正源数据。

# 按列排序df.sort_index(axis=1).headClose Code Date High Low Open Volumedate2015-12-24 9.8230 000001 2015-12-24 9.9980 9.7440 10.9190 640229.00002015-12-25 1.0000 000001 2015-12-25 1.0000 9.8150 10.8550 399845.00002015-12-28 1.0000 000001 2015-12-28 1.0000 9.5370 10.8950 822408.00002015-12-29 9.6240 000001 2015-12-29 9.6320 9.5290 10.5450 619802.00002015-12-30 9.6320 000001 2015-12-30 9.6400 9.5130 10.6240 532667.0000# 按行排序,不递增df.sort_index(ascending=False).headDate Open Close High Low Volume Codedate2018-08-08 2018-08-08 10.1600 9.1100 9.1600 9.0900 153901.0000 0000012018-08-07 2018-08-07 9.9600 9.1700 9.1700 8.8800 690423.0000 0000012018-08-06 2018-08-06 9.9400 8.9400 9.1100 8.8900 554010.0000 0000012018-08-03 2018-08-03 9.9300 8.9100 9.1000 8.9100 476546.0000 0000012018-08-02 2018-08-02 10.1300 8.9400 9.1500 8.8800 931401.0000 000001

安装某一列的值排序

# 按照Open列的值从小到大排序df.sort_values(by=\"大众Open\公众)Date Open Close High Low Volume Codedate 2016-03-01 2016-03-01 8.6580 7.7220 7.7770 7.6260 377910.0000 0000012016-02-15 2016-02-15 8.6900 7.7930 7.8410 7.6820 278499.0000 0000012016-01-29 2016-01-29 8.7540 7.9610 8.0240 7.7140 544435.0000 0000012016-03-02 2016-03-02 8.7620 8.0400 8.0640 7.7380 676613.0000 0000012016-02-26 2016-02-26 8.7770 7.7930 7.8250 7.6900 392154.0000 000001

4. 合并concat, 按照行方向或者列方向合并。

# 分别取0到2行,2到4行,4到9行组成一个列表,通过concat方法按照axis=0,行方向合并, axis参数不指定,默认为0split_rows = [df.iloc[0:2,:],df.iloc[2:4,:], df.iloc[4:9]]pd.concat(split_rows)Date Open Close High Low Volume Codedate2015-12-24 2015-12-24 10.9190 9.8230 9.9980 9.7440 640229.0000 0000012015-12-25 2015-12-25 10.8550 1.0000 1.0000 9.8150 399845.0000 0000012015-12-28 2015-12-28 10.8950 1.0000 1.0000 9.5370 822408.0000 0000012015-12-29 2015-12-29 10.5450 9.6240 9.6320 9.5290 619802.0000 0000012015-12-30 2015-12-30 10.6240 9.6320 9.6400 9.5130 532667.0000 0000012015-12-31 2015-12-31 10.6320 9.5450 9.6560 9.5370 491258.0000 0000012016-01-04 2016-01-04 10.5530 8.9950 9.5770 8.9400 563497.0000 0000012016-01-05 2016-01-05 9.9720 9.0750 9.2100 8.8760 663269.0000 0000012016-01-06 2016-01-06 10.0910 9.1790 9.2020 9.0670 515706.0000 000001# 分别取2到3列,3到5列,5列及往后列数组成一个列表,通过concat方法按照axis=1,列方向合并split_columns = [df.iloc[:,1:2], df.iloc[:,2:4], df.iloc[:,4:]]pd.concat(split_columns, axis=1).headOpen Close High Low Volume Code date2015-12-24 10.9190 9.8230 9.9980 9.7440 640229.0000 0000012015-12-25 10.8550 1.0000 1.0000 9.8150 399845.0000 0000012015-12-28 10.8950 1.0000 1.0000 9.5370 822408.0000 0000012015-12-29 10.5450 9.6240 9.6320 9.5290 619802.0000 0000012015-12-30 10.6240 9.6320 9.6400 9.5130 532667.0000 000001

追加行, 相应的还有insert, 插入插入到指定位置

# 将第一行追加到末了一行df.append(df.iloc[0,:], ignore_index=True).tailDate Open Close High Low Volume Code637 2018-08-03 9.9300 8.9100 9.1000 8.9100 476546.0000 000001638 2018-08-06 9.9400 8.9400 9.1100 8.8900 554010.0000 000001639 2018-08-07 9.9600 9.1700 9.1700 8.8800 690423.0000 000001640 2018-08-08 10.1600 9.1100 9.1600 9.0900 153901.0000 000001641 2015-12-24 10.9190 9.8230 9.9980 9.7440 640229.0000 000001

5. 工具复制由于dataframe是引用工具,以是须要显示调用copy方法用以复制全体dataframe工具。

四、绘图

pandas的绘图是利用matplotlib,如果想要画的更细致, 可以利用matplotplib,不过大略的画一些图还是不错的。

由于上图太麻烦,这里就不配图了,可以在资源文件里面查看pandas-blog.ipynb文件或者自己敲一遍代码。

# 这里利用notbook,为了直接在输出中显示,须要以下配置%matplotlib inline# 绘制Open,Low,Close.High的线性图df[[\"大众Open\公众, \"大众Low\"大众, \"大众High\公众, \"大众Close\"大众]].plot# 绘制面积图df[[\"大众Open\"大众, \"大众Low\"大众, \"大众High\"大众, \公众Close\"大众]].plot(kind=\公众area\"大众)

五、数据读写

读写常见文件格式,如csv,excel,json等,乃至是读取“系统的剪切板”这个功能有时候很有用。直接将鼠标选中复制的内容读取创建dataframe工具。

# 将df数据保存到当前事情目录的stock.csv文件df.to_csv(\"大众stock.csv\"大众)# 查看stock.csv文件前5行with open(\"大众stock.csv\"大众) as rf:print(rf.readlines[:5])['date,Date,Open,Close,High,Low,Volume,Code\n', '2015-12-24,2015-12-24,9.919,9.823,9.998,9.744,640229.0,000001\n', '2015-12-25,2015-12-25,9.855,9.879,9.927,9.815,399845.0,000001\n', '2015-12-28,2015-12-28,9.895,9.537,9.919,9.537,822408.0,000001\n', '2015-12-29,2015-12-29,9.545,9.624,9.632,9.529,619802.0,000001\n']# 读取stock.csv文件并将第一行作为indexdf2 = pd.read_csv(\"大众stock.csv\"大众, index_col=0)df2.headDate Open Close High Low Volume Codedate2015-12-24 2015-12-24 9.9190 9.8230 9.9980 9.7440 640229.0000 12015-12-25 2015-12-25 9.8550 9.8790 9.9270 9.8150 399845.0000 12015-12-28 2015-12-28 9.8950 9.5370 9.9190 9.5370 822408.0000 12015-12-29 2015-12-29 9.5450 9.6240 9.6320 9.5290 619802.0000 12015-12-30 2015-12-30 9.6240 9.6320 9.6400 9.5130 532667.0000 1# 读取stock.csv文件并将第一行作为index,并且将000001作为str类型读取, 不然会被解析成整数df2 = pd.read_csv(\"大众stock.csv\"大众, index_col=0, dtype={\"大众Code\公众: str})df2.head

六、大略实例

这里以处理web日志为例,大概不太实用,由于ELK处理这些绰绰有余,不过喜好什么自己来也未尝不可。

1. 剖析access.log

日志文件: https://raw.githubusercontent.com/Apache-Labor/labor/master/labor-04/labor-04-example-access.log

2. 日志格式及示例

# 日志格式# 字段解释, 参考:https://ru.wikipedia.org/wiki/Access.log%h%l%u%t \“%r \”%> s%b \“%{Referer} i \”\“%{User-Agent} i \”# 详细示例75.249.65.145 US - [2015-09-02 10:42:51.003372] \"大众GET /cms/tina-access-editor-for-download/ HTTP/1.1\"大众 200 7113 \"大众-\公众 \公众Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)\"大众 www.example.com 124.165.3.7 443 redirect-handler - + \"大众-\"大众 Vea2i8CoAwcAADevXAgAAAAB TLSv1.2 ECDHE-RSA-AES128-GCM-SHA256 701 12118 -% 88871 803 0 0 0 0

3. 读取并解析日志文件解析日志文件HOST = r'^(?P<host>.?)'SPACE = r'\s'IDENTITY = r'\S+'USER = r\公众\S+\"大众TIME = r'\[(?P<time>.?)\]'# REQUEST = r'\\"大众(?P<request>.?)\\公众'REQUEST = r'\\公众(?P<method>.+?)\s(?P<path>.+?)\s(?P<http_protocol>.?)\\"大众'STATUS = r'(?P<status>\d{3})'SIZE = r'(?P<size>\S+)'REFER = r\公众\S+\"大众USER_AGENT = r'\\公众(?P<user_agent>.?)\\"大众'REGEX = HOST+SPACE+IDENTITY+SPACE+USER+SPACE+TIME+SPACE+REQUEST+SPACE+STATUS+SPACE+SIZE+SPACE+IDENTITY+USER_AGENT+SPACEline = '79.81.243.171 - - [30/Mar/2009:20:58:31 +0200] \"大众GET /exemples.php HTTP/1.1\"大众 200 11481 \公众http://www.facades.fr/\公众 \"大众Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; .NET CLR 1.0.3705; .NET CLR 1.1.4322; Media Center PC 4.0; .NET CLR 2.0.50727)\"大众 \"大众-\"大众'reg = re.compile(REGEX)reg.match(line).groups

将数据注入DataFrame工具

COLUMNS = [\"大众Host\"大众, \公众Time\"大众, \"大众Method\公众, \公众Path\"大众, \"大众Protocol\"大众, \公众status\"大众, \"大众size\"大众, \公众User_Agent\"大众]field_lis =with open(\公众access.log\公众) as rf:for line in rf:# 由于一些记录不能匹配,以是须要捕获非常, 不能捕获的数据格式如下# 80.32.156.105 - - [27/Mar/2009:13:39:51 +0100] \"大众GET HTTP/1.1\公众 400 - \"大众-\公众 \公众-\"大众 \"大众-\公众# 由于重点不在写正则表达式这里就略过了try:fields = reg.match(line).groupsexcept Exception as e:#print(e)#print(line)passfield_lis.append(fields)log_df = pd.DataFrame(field_lis)# 修正列名log_df.columns = COLUMNSdef parse_time(value):try:return pd.to_datetime(value)except Exception as e:print(e)print(value)# 将Time列的值修正成pandas可解析的韶光格式log_df.Time = log_df.Time.apply(lambda x: x.replace(\"大众:\公众, \公众 \公众, 1))log_df.Time = log_df.Time.apply(parse_time)# 修正index, 将Time列作为index,并drop掉在Time列log_df.index = pd.to_datetime(log_df.Time)log_df.drop(\"大众Time\公众, inplace=True)log_df.headHost Time Method Path Protocol status size User_AgentTime2009-03-22 06:00:32 88.191.254.20 2009-03-22 06:00:32 GET / HTTP/1.0 200 8674 \"大众-2009-03-22 06:06:20 66.249.66.231 2009-03-22 06:06:20 GET /popup.php?choix=-89 HTTP/1.1 200 1870 \"大众Mozilla/5.0 (compatible; Googlebot/2.1; +htt...2009-03-22 06:11:20 66.249.66.231 2009-03-22 06:11:20 GET /specialiste.php HTTP/1.1 200 10743 \"大众Mozilla/5.0 (compatible; Googlebot/2.1; +htt...2009-03-22 06:40:06 83.198.250.175 2009-03-22 06:40:06 GET / HTTP/1.1 200 8714 \"大众Mozilla/4.0 (compatible; MSIE 7.0; Windows N...2009-03-22 06:40:06 83.198.250.175 2009-03-22 06:40:06 GET /style.css HTTP/1.1 200 1692 \"大众Mozilla/4.0 (compatible; MSIE 7.0; Windows N...

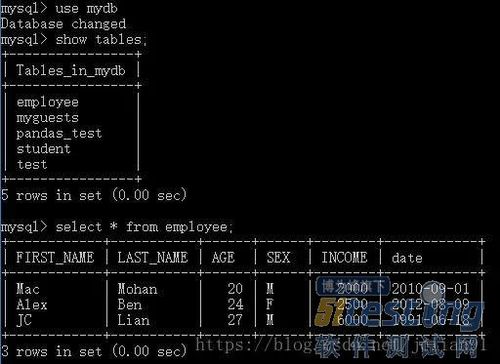

查看数据类型

# 查看数据类型log_df.dtypesHost objectTime datetime64[ns]Method objectPath objectProtocol objectstatus objectsize objectUser_Agent objectdtype: object

由上可知, 除了Time字段是韶光类型,其他都是object,但是Size, Status该当为数字

def parse_number(value):try:return pd.to_numeric(value)except Exception as e:passreturn 0# 将Size,Status字段值改为数值类型log_df[[\公众Status\"大众,\"大众Size\公众]] = log_df[[\"大众Status\"大众,\公众Size\"大众]].apply(lambda x: x.apply(parse_number))log_df.dtypesHost objectTime datetime64[ns]Method objectPath objectProtocol objectStatus int64Size int64User_Agent objectdtype: object

统计status数据

# 统计不同status值的次数log_df.Status.value_counts200 5737304 1540404 1186400 251302 37403 3206 2Name: Status, dtype: int64

绘制pie图

log_df.Status.value_counts.plot(kind=\"大众pie\公众, figsize=(10,8))

查看日志文件韶光跨度

log_df.index.max - log_df.index.minTimedelta('15 days 11:12:03')

分别查看起始,终止韶光

print(log_df.index.max)print(log_df.index.min)2009-04-06 17:12:352009-03-22 06:00:32

按照此方法还可以统计Method, User_Agent字段 ,不过User_Agent还须要额外洗濯以下数据。

统计top 10 IP地址

91.121.31.184 74588.191.254.20 44141.224.252.122 420194.2.62.185 25586.75.35.144 184208.89.192.106 17079.82.3.8 16190.3.72.207 15762.147.243.132 15081.249.221.143 141Name: Host, dtype: int64

绘制要求走势图

log_df2 = log_df.copy# 为每行加一个request字段,值为1log_df2[\"大众Request\"大众] = 1# 每一小时统计一次request数量,并将NaN值替代为0,末了绘制线性图,尺寸为16x9log_df2.Request.resample(\公众H\"大众).sum.fillna(0).plot(kind=\"大众line\"大众,figsize=(16,10))

分别绘图

分别对202,304,404状态重新取样,并放在一个列表里面req_df_lis = [log_df2[log_df2.Status == 200].Request.resample(\"大众H\公众).sum.fillna(0),log_df2[log_df2.Status == 304].Request.resample(\公众H\公众).sum.fillna(0),log_df2[log_df2.Status == 404].Request.resample(\公众H\"大众).sum.fillna(0)]# 将三个dataframe组合起来req_df = pd.concat(req_df_lis,axis=1)req_df.columns = [\公众200\公众, \"大众304\"大众, \"大众404\公众]# 绘图req_df.plot(figsize=(16,10))