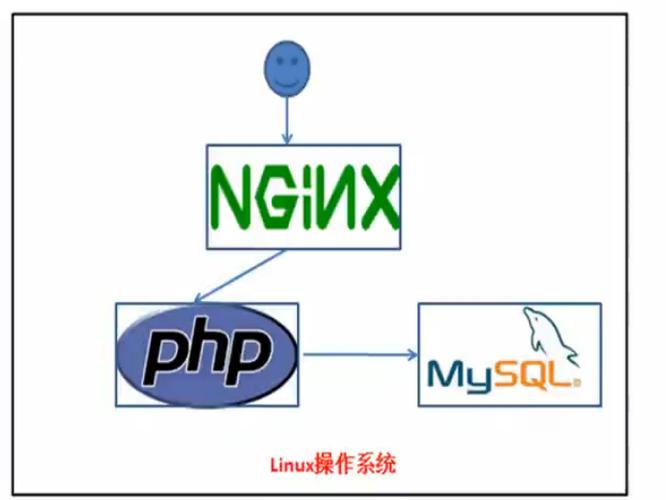

Thrift是一种接口描述措辞和二进制通讯协议,它被用来定义和创建跨措辞的做事。常日被当作RPC框架来利用,最初是Facebook为理解决大规模的跨措辞做事调用而设计开拓出来的,Thrift架构图如下:

从上图可以看出Thrift采取了分层架构,观点非常清晰,便于大家理解利用,从下至上依次为传输层、协议层和处理层;

理解完Thrift架构,我们通过一个大略的例子来理解Thrift是如何事情的。Thrift定义了一种接口描述措辞IDL,IDL文件可以被Thrift代码天生期处理天生目标措辞的代码,我们定义了一个大略的IDL文件tutorial.thrift文件,内容如下:

#cat tutorial.thriftserver Calculator{ void ping(), i32 add(i:i32 num1, 2:i32 num2)}

文件中我们定义了一个名为calculator的service,包含两个接口ping和add,环绕这个文件我们分别实现客户端和做事端代码,在编写代码之前须要利用如下命令天生目标措辞代码:

thrift -gen py(更换py为其他措辞可以天生对应措辞的) tutorial.thrift

以python为例实现做事端代码:

import sys, globsys.path.append('gen-py')sys.path.insert(0, glob.glob('../../lib/py/build/lib.')[0])from tutorial import Calculatorfrom tutorial.ttypes import from shared.ttypes import SharedStructfrom thrift.transport import TSocketfrom thrift.transport import TTransportfrom thrift.protocol import TBinaryProtocolfrom thrift.server import TServerclass CalculatorHandler: def __init__(self): self.log = {} def ping(self): print 'ping()' def add(self, n1, n2): print 'add(%d,%d)' % (n1, n2) return n1+n2handler = CalculatorHandler()processor = Calculator.Processor(handler)transport = TSocket.TServerSocket(port=9090)tfactory = TTransport.TBufferedTransportFactory()pfactory = TBinaryProtocol.TBinaryProtocolFactory()server = TServer.TSimpleServer(processor, transport, tfactory, pfactory)# You could do one of these for a multithreaded server#server = TServer.TThreadedServer(processor, transport, tfactory, pfactory)#server = TServer.TThreadPoolServer(processor, transport, tfactory, pfactory)print 'Starting the server...'server.serve()print 'done.'

全体做事端须要我们实现的便是处理层,决定如果处理客户群传输过来的数据,对应CalculatorHandler实现了接口中定义的ping和add方法,分别输出\"大众ping()\公众和实现整数加法,将handler作为参数天生Processor,传输层利用TServerSocket监听9090端口,传输层利用TBinaryProtocol同时利用Buffer进行读写(用TBufferedTransport进行装饰),可以选用不同的Server模型,测试利用TSimpleServer,将传输层、协议层、处理层以及Server组合到一起就完玉成体做事端开拓事情;在看下客户端实现:

import sys, globsys.path.append('gen-py')sys.path.insert(0, glob.glob('../../lib/py/build/lib.')[0])from tutorial import Calculatorfrom tutorial.ttypes import from thrift import Thriftfrom thrift.transport import TSocketfrom thrift.transport import TTransportfrom thrift.protocol import TBinaryProtocoltry: # Make socket transport = TSocket.TSocket('localhost', 9090) # Buffering is critical. Raw sockets are very slow transport = TTransport.TBufferedTransport(transport) # Wrap in a protocol protocol = TBinaryProtocol.TBinaryProtocol(transport) # Create a client to use the protocol encoder client = Calculator.Client(protocol) # Connect! transport.open() client.ping() print 'ping()' sum = client.add(1,1) print '1+1=%d' % (sum) # Close! transport.close()except Thrift.TException, tx: print '%s' % (tx.message)

客户真个实现也很大略传输层利用TSocket访问本地9090端口同时利用buffer进行读写(TBufferedTransport进行装饰),协议层利用TBinaryProtocol,初始化Calculator.Client(根据IDL自动天生代码),我就可以利用client进行方法的调用;

上面这个例子完全的解释了如何通过Thrift实现自己的业务逻辑,通过Thrift访问HBase同上面实质上是完备一样的,只不过HBase供应了更多的接口而已,HBase供应了相应的IDL文件Hbase.thrift,通过该文件可以天生对应措辞的客户端代码,还是已python为例实现基本的CURD操作,代码如下:

from thrift.transport import TSocketfrom thrift.transport import TTransportfrom thrift.protocol import TBinaryProtocol# hbase 客户端代码是由 thrift -gen py hbase.thrift 天生,拷贝到工程目录下from hbase.THBaseService import Clientfrom hbase.ttypes import TScan, TColumn, TGet, TPut, TColumnValue, TableDescriptor, ColumnDescriptor, TDeleteclass HbaseClient(object): def __init__(self, host='hbasetest02.et2sqa.tbsite.net', port=9090): self.transport = TTransport.TBufferedTransport(TSocket.TSocket(host, port)) protocol = TBinaryProtocol.TBinaryProtocol(self.transport) self.client = Client(protocol) def list_tables(self): self.transport.open() tables = self.client.listTables() self.transport.close() return tables def scan_data(self, table, startRow, stopRow, limit, columns=None): self.transport.open() if columns: columns = [TColumn(i.split(':')) for i in columns] scan = TScan(startRow=startRow, stopRow=stopRow, columns=columns) rows = self.client.scan(table, scan, limit) self.transport.close() return rows def get(self, table, row=None, columns=None): self.transport.open() if columns: columns = [TColumn(i.split(':')) for i in columns] get = TGet(row=row, columns=columns) res = self.client.get(table, get) self.transport.close() return res def put(self, table, row, columnValues): self.transport.open() put = TPut(row, columnValues) self.client.put(table, put) self.transport.close() def table_exists(self, table): self.transport.open() res = self.client.tableExists(table) self.transport.close() return res def delete_single(self, table, delete): self.transport.open() self.client.deleteSingle(table, delete) self.transport.close() def create_table(self, tableDescriptor, splitKeys): self.transport.open() self.client.createTable(tableDescriptor, splitKeys) self.transport.close() def enable_table(self, table): self.transport.open() self.client.enableTable(table) self.transport.close() def disable_table(self, table): self.transport.open() self.client.disableTable(table) self.transport.close()if __name__ == '__main__': client = HbaseClient() print client.list_tables() print client.scan_data('bear_test', startRow='row1', stopRow='row5', limit=10, columns=['f1:name']) print client.get('bear_test', 'row1', ['f1:age']) columnValues = [TColumnValue('f1', 'name', 'bear3')] client.put('bear_test', 'row4', columnValues) print client.table_exists('bear_test') delete = TDelete('row4') client.delete_single('bear_test', delete) print client.scan_data('bear_test', startRow='row1', stopRow='row5', limit=10, columns=['f1:name']) tableDescriptor = TableDescriptor('bear_test1', families=[ColumnDescriptor(name='f1', maxVersions=1)]) client.create_table(tableDescriptor, None) print client.table_exists('bear_test1')

对付上述例子先容下几个关键的点,HBase Thrift Server默认利用TThreadPoolServer做事模型(在并发数可控的情形下利用该做事模型性能最好),相应的客户端传输层利用TTransport.TBufferedTransport(TSocket.TSocket(host, port))以及协议层TBinaryProtocol同做事端进行通信;同时其余一个须要把稳的点便是Client本身并不是线程安全的,每一个线程要利用单独的Client;

阿里云HBase本身供应了高可用版本的ThriftServer,通过负载均衡将流量分散到不同的Thrift Server上,最大程度上担保做事的高可用和高性能,利用户可以不必关系资源、支配以及运维,将精力都放在业务逻辑的实现上,云HBase的连接地址以及利用可以参考(https://help.aliyun.com/document_detail/87068.html);学会了如何利用客户端,同时云HBase Thrift Server免运维,那么唯一须要关心的便是监控了,每一个云HBase实例多支配了ganglia监控,可以在云HBase实例-》数据库连接-》UI访问中找到:

点击Ganglia进入到主页,在主页最下面可以找到hbase_cluster。

点击hbase_cluster进入到hbase监控详情:

详情页的最上面为韶光设置,可以选择hour、2hr、4hr平分歧的韶光纬度,也可以在from、to中设置自定义韶光

详情页的下面为指标选择:

thrift干系的指标都因此thrift-one和thrift-two开头的,分别对应thrift1和thrift2,thrift默认利用TThreadPoolServer做事模型,利用thrift紧张关注3种监控指标:

thrift-one.Thrift.numActiveWorkers:表示当前生动的事情线程,默认的最大线程数为1000,通过该指标可以判断当前thrift server的负载情形thrift-one.Thrift.callQueueLen:表示要求的行列步队大小,默认行列步队长度为1000,该指标同thrift-one.Thrift.numActiveWorkers一同反应thrift server的负载情形thrift-one.Thrift.方法名_开头的各种指标:表示对应方法的thrift server耗时,以batchGet方法为例thrift-one.Thrift.batchGet_max、thrift-one.Thrift.batchGet_mean以及thrift-one.Thrift.batchGet_min分别表示batchGet的最大、均匀以及最小耗时。作者:修者

本文为云栖社区内容,未经许可不得转载。